Leaderboard

Popular Content

Showing content with the highest reputation on 04/28/23 in all areas

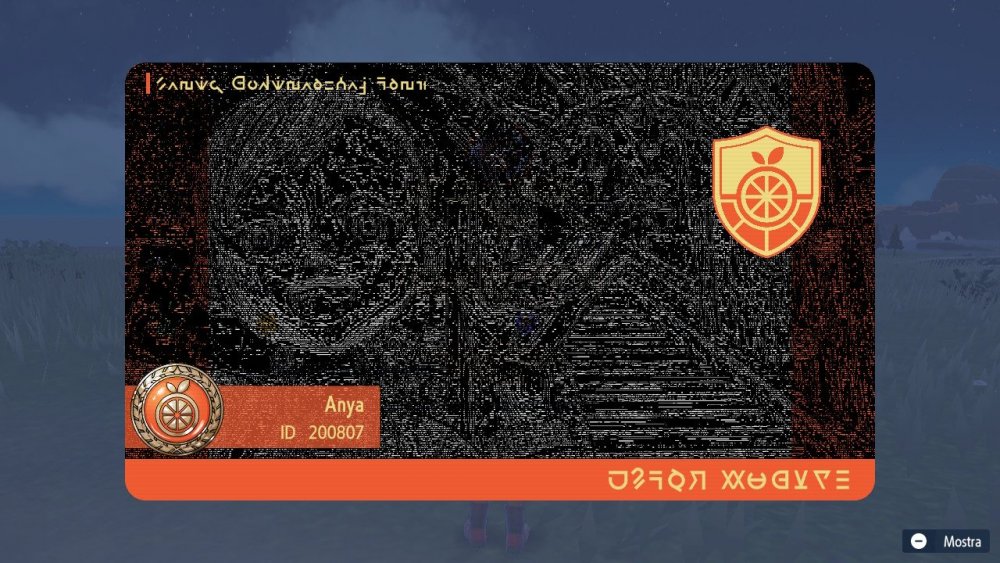

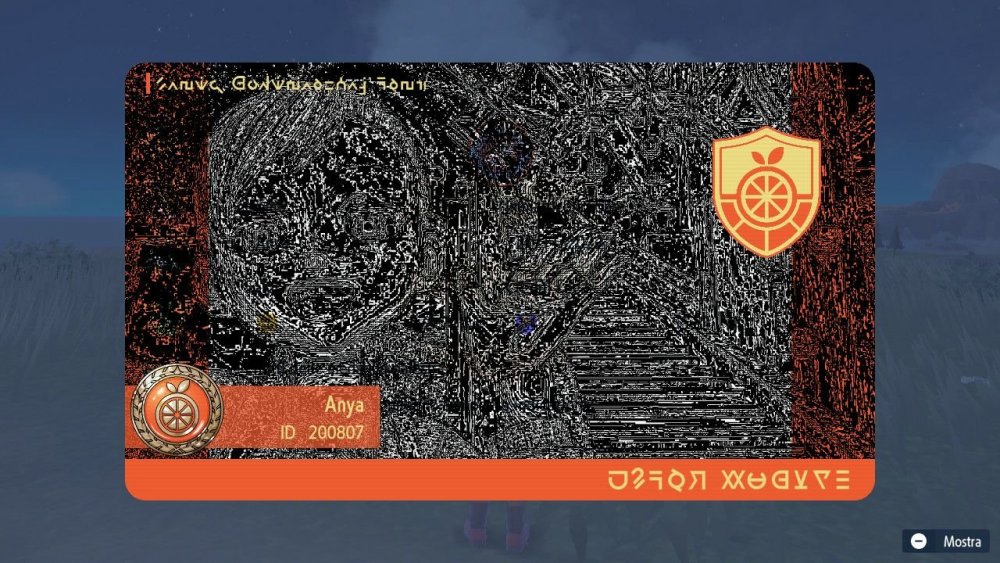

Probably my last update. I kept experimenting and got some acceptable results, although nothing can be taken for certain, since the true algorithm will never be released to the public.

The original image:

The new experiment:

It was obtained as follows: save the light image and the dark image, also save the light mask and the dark mask, and make a third mask from the bitwise, pixel-by-pixel OR of the two original masks (the masks have to be read as grayscale images, so each pixel holds a value between 0 and 255). Then, for each pixel of the two initial images, do alpha blending, taking as alpha the corresponding pixel value of the OR mask, scaled to the range 0-1. So for the three channels we have the following:

```python
from PIL import Image

# r, g, b are the three channels. The two images are lists of (r, g, b) tuples,
# one tuple per pixel, named lightIM and darkIM. mask is a list of grayscale
# values (0-255), the pixel-by-pixel OR of the two original masks.
# width and height are defined earlier in the script (not shown here).
finalIM = []  # the final image, built pixel by pixel
for px in range(len(lightIM)):
    # Scale the 0-255 mask value to an alpha between 0 and 1
    alpha = mask[px] / 255
    newR = int(lightIM[px][0] * (1 - alpha) + darkIM[px][0] * alpha)
    newG = int(lightIM[px][1] * (1 - alpha) + darkIM[px][1] * alpha)
    newB = int(lightIM[px][2] * (1 - alpha) + darkIM[px][2] * alpha)
    finalIM.append((newR, newG, newB))

# The output image is a quarter of the width and height
image = Image.new("RGB", (int(width / 4), int(height / 4)))
image.putdata(finalIM)
image.save("final.png")
```

Obtaining these:

The old algebraic pixel-by-pixel average:

As you can see, they look pretty similar, with the new experiment being a little smoother, but maybe the overworld lighting isn't completely correct. Anyway, I'm leaving this here so it can be researched further by whoever wants to give it a try or implement it in any form. I'll pause on this topic for a while, but I'll still keep an eye on it when I'm able to.
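The OR-mask step described above isn't part of the blending loop. A minimal sketch, assuming both masks have already been read as grayscale and flattened into lists of 0-255 values (the names `or_mask`, `light_mask` and `dark_mask` are hypothetical):

```python
def or_mask(light_mask, dark_mask):
    """Combine two grayscale masks (lists of 0-255 ints) with a pixel-by-pixel bitwise OR."""
    if len(light_mask) != len(dark_mask):
        raise ValueError("masks must have the same number of pixels")
    return [a | b for a, b in zip(light_mask, dark_mask)]


# Tiny 4-pixel example using pure black (0) and white (255) values
light_mask = [0, 255, 0, 255]
dark_mask = [0, 0, 255, 255]
print(or_mask(light_mask, dark_mask))  # [0, 255, 255, 255]
```

For pure black-and-white masks this is simply the union of the white areas. With intermediate gray values a bitwise OR can come out brighter than either input (170 | 85 is 255), so a per-pixel `max` may be worth trying as an alternative.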

Single-block display:

The first two bytes constitute the basic low-res image.

The other two bytes, for the dark image, aren't shown when the first two bytes are black, so it's possible that a logical AND is performed between the first two groups of bytes.

The two masks overlap perfectly in the image I posted in my previous reply; furthermore, it's very likely they need to be read as grayscale, since they are both black and white. They are probably there as alpha-channel balancing to make the original image look smoother.

EDIT: I can nearly confirm that the light image and the dark image have to be alpha blended to make the final image. This logic explains why taking the statistical mean pixel by pixel works: it is an alpha blend with alpha fixed at 0.5. What still needs to be understood is how to apply the composite mask for blending. My guess was to perform the alpha blending arithmetically, pixel by pixel, using as alpha the luminance intensity of the corresponding pixel in the mask. Another way could be to compute a single fixed alpha as the number of white pixels divided by the total number of pixels in the mask.
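The two alpha strategies guessed above can be sketched as follows. This is a minimal sketch assuming pixels are (r, g, b) tuples and the mask is a flat list of 0-255 grayscale values; treating any value above 127 as "white" is an assumption, and the function names are hypothetical.

```python
def blend_pixel(light, dark, alpha):
    """Alpha-blend two (r, g, b) pixels: alpha=0 keeps light, alpha=1 keeps dark."""
    return tuple(int(l * (1 - alpha) + d * alpha) for l, d in zip(light, dark))


def per_pixel_alpha(mask_value):
    """Guess 1: use the mask pixel's intensity (0-255) as alpha, scaled to 0-1."""
    return mask_value / 255


def fixed_alpha(mask, threshold=127):
    """Guess 2: one alpha for the whole image, the fraction of white mask pixels."""
    white = sum(1 for v in mask if v > threshold)
    return white / len(mask)


# With alpha fixed at 0.5 the blend reduces to the per-channel mean, rounded down
print(blend_pixel((200, 101, 30), (100, 50, 31), 0.5))  # (150, 75, 30)
print(fixed_alpha([0, 255, 255, 0]))  # 0.5
```

Since `int` truncates the non-negative blended value, `blend_pixel(a, b, 0.5)` gives the same result as `(a + b) // 2` on each channel, which matches the observation that the plain pixel-by-pixel mean already looks right.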

Other source material: I truncated the photo block to see how the game directly interprets the various components.

The first one is the normal photo.

The second one is the photo without the masks (just bytes 0-1-2-3), and it looks pixelated/low-res.

The third one is the photo with only the masks (bytes 4-5-6-7); it could be an XOR between the two masks.
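To compare the XOR hypothesis with an OR combination of the masks, here is a minimal sketch that works on flat lists of 0-255 grayscale mask values (the function and variable names are hypothetical):

```python
import operator


def combine_masks(mask_a, mask_b, op):
    """Combine two equally sized grayscale masks pixel by pixel with a bitwise op."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of pixels")
    return [op(a, b) for a, b in zip(mask_a, mask_b)]


mask_a = [0, 255, 0, 255]
mask_b = [0, 0, 255, 255]
print(combine_masks(mask_a, mask_b, operator.or_))  # [0, 255, 255, 255]
print(combine_masks(mask_a, mask_b, operator.xor))  # [0, 255, 255, 0]
```

The two operations differ only where both masks are white: OR keeps those pixels white, while XOR turns them black. Rendering both and comparing them against the third truncated photo may help tell the hypotheses apart.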

Apparently, computing the mean of the first two images (for each pixel of the two images: (pxIM1 + pxIM2) // 2) gives good results. I'm still figuring out what the last two images do. Also, given the values in the resolution blocks, I've started to think it could be a custom subsampling algorithm. News will follow.
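The pixel-by-pixel mean described above can be sketched for images stored as lists of (r, g, b) tuples, applying `(pxIM1 + pxIM2) // 2` to each channel (the function name is hypothetical):

```python
def mean_image(im1, im2):
    """Average two equally sized RGB images channel by channel: (px1 + px2) // 2."""
    if len(im1) != len(im2):
        raise ValueError("images must have the same number of pixels")
    return [
        tuple((c1 + c2) // 2 for c1, c2 in zip(px1, px2))
        for px1, px2 in zip(im1, im2)
    ]


im1 = [(10, 20, 30), (255, 0, 128)]
im2 = [(20, 21, 40), (0, 255, 129)]
print(mean_image(im1, im2))  # [(15, 20, 35), (127, 127, 128)]
```

Floor division keeps every channel an integer within 0-255, so the resulting list can be passed to `Image.putdata` for an RGB image.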